In the last few years, Robert J. Gordon, a professor of economics at Northwestern University, has persistently argued against the trendy view of the moment that robots, AI and other ‘disruptive technologies’ are about to launch the global economy into a productivity boost never seen before. I have commented before on Gordon’s series of papers developing this proposition.

And very recently, Gordon presented his arguments yet again at ASSA 2016 when he critiqued a paper by David Kotz and Deepankur Basu at an URPE session.

As Gordon says succinctly in that critique: “The evidence accumulates every quarter to support my view that the most important contributions to productivity of the digital revolution are in the past, not in the future. The reason that business firms are spending their money on share buy-backs instead of plant and equipment investment is that the current wave of innovation is not producing novelty sufficiently important to earn the required rate of return.”

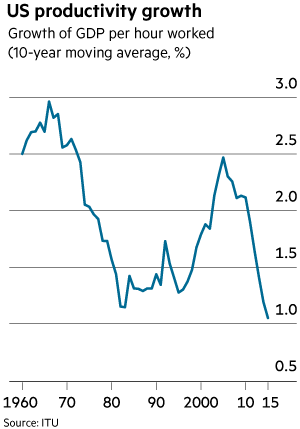

The arrival of the internet and mobile phones has failed to generate a sustained upturn in productivity growth. Output per hour worked in the US grew at a rate of 3 per cent a year in the 10 years up to 1966, after which the growth rate declined, falling to just 1.2 per cent a year in the 10 years to the early 1980s. After the launch of the World Wide Web, the 10-year moving average rose again, reaching 2.5 per cent a year in the decade to 2005. But it then fell to just 1 per cent in the decade to 2015. A decomposition of the sources of growth in productive capacity underlines the point. Over the 10 years up to and including 2015, the average growth of “total factor productivity” in the US (a measure of innovation) was only 0.3 per cent a year. Recent productivity growth has been well below the 2.1 per cent average annual gain seen over the past 67 years.
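To make the gap between these rates concrete, here is a small illustrative calculation (not taken from Gordon or the other sources above). It assumes, purely for illustration, that each quoted 10-year average were sustained as a constant compound rate, and asks how many years output per hour would then take to double. The helper name `doubling_time` is hypothetical.

```python
import math


def doubling_time(annual_growth_pct: float) -> float:
    """Years for a quantity growing at a constant compound annual rate to double."""
    return math.log(2) / math.log(1 + annual_growth_pct / 100)


# Average growth rates of US output per hour quoted above (per cent a year)
rates = {
    "10 years to 1966": 3.0,
    "10 years to early 1980s": 1.2,
    "10 years to 2005": 2.5,
    "decade to 2015": 1.0,
    "67-year average": 2.1,
}

for period, rate in rates.items():
    # Assumes the rate is held constant indefinitely (an illustrative simplification)
    print(f"{period:<25} {rate:>4.1f}%  doubles in {doubling_time(rate):5.1f} years")
```

On this assumption, output per hour doubles in roughly 23 years at 3 per cent a year but takes about 70 years at 1 per cent, which is the arithmetic behind the worry that each new generation may no longer be twice as well off as the last.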

So, according to Gordon, the great new innovatory, productivity-enhancing paradigm that is supposedly coming from the digital revolution is actually already over, and the future robot/AI explosion will not change that. On the contrary, far from faster economic growth and productivity, the world capitalist economy is slowing down as a result of slower growth in population and productivity.

Now Gordon has compiled all his ideas, and his retorts to those who have disagreed, into a new book, The Rise and Fall of American Growth. “This book,” Gordon writes in the introduction, “ends by doubting that the standard of living of today’s youths will double that of their parents, unlike the standard of living of each previous generation of Americans back to the late 19th century.” Gordon predicts that innovation will trundle along at the same pace as over the last 40 years. Despite the burst of progress in the Internet era from the 1990s, total factor productivity, which captures innovation’s contribution to growth, rose over those four decades at about one-third the pace of the previous five.

Gordon reckons that the American workforce will continue to decline, as ageing baby boomers leave the workforce and women’s labour supply plateaus. And gains in education, an important driver of productivity that expanded sharply in the 20th century, will contribute little. Moreover, the growing concentration of income means that whatever the growth rate, most of the population will barely share in its fruits. Altogether, Professor Gordon argues, the disposable income of the bottom 99% of the US population, which has expanded about 2% per year since the late 19th century, will expand over the next few decades at a rate little above zero.

Gordon’s argument about productivity growth in the recent past has been confirmed by the evidence, as John Fernald at the San Francisco Fed has shown. And Fernald has argued elsewhere: “Growth in educational attainment, developed-economy R&D intensity and population are all likely to be slower in the future than in the past.”

Even Ben Bernanke, the former chairman of the US Federal Reserve, who is now at the Brookings Institution, has sympathy with Professor Gordon’s proposition. “People who invest money in the markets are saying the rate of return on capital investments is lower than it was 15 or 30 years ago,” Mr. Bernanke said. “Gordon’s forecast is not without some market reality.” Other strands of data point in this direction. Business dynamism, measured by the role of new companies in the economy, appears to be waning. The share of employment in companies less than five years old dropped from about 19 percent in 1982 to 11 percent in 2011.

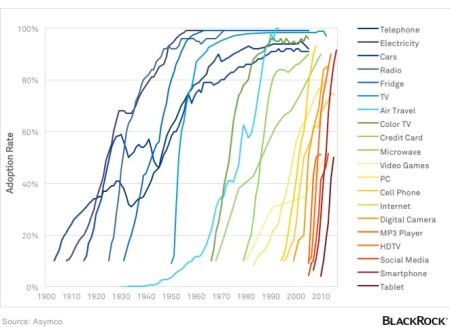

It’s a grim picture that Gordon paints for the future of US capitalism, and thus of the world. But is it right? The argument against it is that people are adopting new technologies, including tablets and smartphones, at the swiftest pace we’ve seen since the advent of television. While television arguably detracted from US productivity, today’s advances in technology are generally geared toward greater efficiency at lower cost and so, the argument goes, will boost the growth of labour productivity (see BlackRock’s diagram of the adoption rate of current new technologies).

Some argue, against Gordon, that statisticians are failing to measure output correctly, partly by failing to capture free services, such as search, which generate vast unmeasured surplus value. But as Martin Wolf of the FT pointed out recently, “it is not at all clear why statisticians should have suddenly lost their ability to measure the impact of new technologies in the early 2000s. Again, most (past) new technologies have also generated vast unmeasured surplus value. Think of the impact of electric light on the ability to study.”

Nevertheless, ranged against Gordon are myriad techno-optimists and economists who reckon that the world is on the brink of a productivity explosion driven by robots, artificial intelligence, genetics and a range of new ‘disruptive technologies’: disruptive in the sense that traditional jobs and functions are going to disappear and be replaced by robots and algorithms. The optimists argue that, since the time of Thomas Malthus, eras of depressed expectations like our own have inspired predictions of doom and gloom that were proved wrong when economies turned up a few years down the road.

Gordon’s main opposition comes from Professor Joel Mokyr, who works with Gordon at Northwestern University. What Professor Gordon fails to account for, Professor Mokyr argues, is that the information technology revolution and other recent developments have produced mind-blowing tools and techniques, from gene-sequencing machines to computers that analyse mountains of data at blistering speed. This is creating vast new opportunities for innovation, from health care to materials technology and beyond. As Mokyr puts it: “The tools available to science have been improving at a dazzling rate, … I’m not sure how, but the world of technology in 30 to 40 years’ time will be vastly different than it is today.”

Every day there is a new story in the media about how people and their skills are, or soon will be, replaced by machines and by computer software that learns for itself. At this year’s World Economic Forum in Davos, Switzerland, the annual meeting of the global elite of bankers, politicians, corporate chiefs and military, the main theme was the ‘Fourth Industrial Revolution’: the idea that advances in robotics and artificial intelligence would have the transformative effect that steam power, electricity and ubiquitous computing achieved in previous centuries.

At Davos, the elite were told by Sebastian Thrun, the inventor of Google’s self-driving cars and an honorary professor at Delft University of Technology, that “almost every established industry is not moving fast enough” to adapt its business to this change. He suggested that self-driving cars would make millions of taxi drivers redundant and that planes running solely on autopilot would remove the need for thousands of human pilots. But don’t worry: Thrun was optimistic that the redundant roles would quickly be replaced. “With the advent of new technologies, we’ve always created new jobs,” he said.

As Erik Brynjolfsson, the Massachusetts Institute of Technology professor, co-author of “The Second Machine Age” and one of the most prominent observers of the new ‘industrial revolution’, put it: “We’re moving to a world where there will be vastly more wealth and vastly less work.” But he went on: “I think the biggest immediate change will be a move away from … one person [staying] in one profession or one job during their lifetime… That shouldn’t be a bad thing, and shame on us if we turn it into a bad thing.”

Such optimism contrasted with the WEF’s own report launched at Davos, which reckoned that increased automation and AI in the workforce would lead to the loss of 7.1m jobs over the next five years in 15 leading economies, while helping to create just 2m new jobs over the same period. In the financial sector, a thinking, learning and trading computer may well make even today’s superfast, ultra-complex investment algorithms look archaic, and possibly render human fund managers redundant. You might say we don’t care too much about losing hedge fund managers. But AI and robots will also destroy the jobs of millions of workers in productive sectors who earn much less. This is the prospect for labour in a robot-led capitalism.

It’s not just the loss of jobs for millions that is in prospect from AI and robots: some argue that AI threatens the existence of humanity itself. Ray Kurzweil, the American inventor and futurist, has predicted that by 2045 the development of computing technologies will reach a point at which AI outstrips the ability of humans to comprehend and control it. Stephen Hawking has argued that “the development of full artificial intelligence could spell the end of the human race”. And Elon Musk, the founder of SpaceX and Tesla Motors, believes that artificial intelligence is “potentially more dangerous than nukes”. The “biggest existential threat” to humanity, he thinks, is a Terminator-like super machine intelligence that will one day dominate humanity.

Moreover, while computers are quicker, smarter and shorn of human behavioural biases, they come with their own weaknesses. Disaster can strike quickly. For example, Knight Capital, a high-frequency trading firm, imploded in 2012 when its computers ran amok, effectively losing $10m a minute in a devastating 45-minute trading blitz. As Gavekal, an investment brokerage, acerbically noted at the time: “Sometimes all computers do is replace human stupidity with machine stupidity. And, thanks to speed and preprogrammed conviction, machine stupidity can devour markets far faster than any human panic can achieve.” Algorithms based on artificial intelligence techniques may be the next generation of quantitative finance, but even industry insiders say they can unravel when confronted with the chaotic reality of markets. “This stuff in the hands of the wrong people can be very dangerous,” says Tom Doris, the head of Otas Technologies. The same risk arises outside finance: self-driving cars suffered twice as many accidents as human-driven ones in 2013, according to a University of Michigan study. Most were minor scrapes, and the human drivers of the other vehicles were at fault in every case, but this is a vivid illustration of how accidents can happen when man meets machine, whether on the road or in markets.

But can robots really replace humans within 30 years? Many doubt it. Scenarios such as Kurzweil’s are extrapolations from Moore’s law, according to which the number of transistors on a chip doubles roughly every two years, delivering greater and greater computational power at ever-lower cost. But Gordon Moore, after whom Moore’s law is named, has himself acknowledged that his generalisation is becoming unreliable because there is a physical limit to how many transistors you can squeeze into an integrated circuit. In any case, Moore’s law is a measure of computational power, not intelligence. A vacuum-cleaning robot such as a Roomba will clean the floor quickly and cheaply and increasingly well, but it will never book a holiday for itself with my credit card.
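A rough sketch of why extrapolations from Moore’s law look so dramatic: if transistor counts kept doubling strictly every two years, the multiplier over the 30-year horizon discussed here would be 2^15. The function below is a hypothetical illustration, not anyone’s forecasting model. It assumes a constant doubling period, which is exactly the assumption Moore himself now doubts, and it measures raw computational capacity, not intelligence.

```python
def moore_multiple(years: float, doubling_period: float = 2.0) -> float:
    """Growth factor in transistor count over `years`, assuming a fixed doubling period.

    Hypothetical helper for illustration only: real doubling periods are not constant.
    """
    return 2 ** (years / doubling_period)


print(f"{moore_multiple(30):,.0f}")       # strict two-year doubling: 32,768
print(f"{moore_multiple(30, 3.0):,.0f}")  # if doubling slows to every three years: 1,024
```

Stretching the doubling period from two years to three cuts the 30-year multiple from 32,768 to 1,024, so conclusions built on the extrapolation are highly sensitive to whether the physical limits Moore describes start to bind.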

Luciano Floridi of the University of Oxford agrees that machines can do amazing things, often better than humans. For instance, IBM’s Deep Blue computer played and beat the world chess champion Garry Kasparov in 1997. In 2011, another IBM machine, Watson, won an episode of the TV quiz show Jeopardy, beating two human players, one of whom had enjoyed a 74-show winning streak. But Deep Blue and Watson are versions of the “Turing machine”, a mathematical model devised by Alan Turing which sets the limits of what a computer can do. A Turing machine has no understanding, no consciousness and no intuitions; in short, nothing we would recognise as a mental life. It lacks the intelligence even of a mouse.

Floridi explains that in 1950 Turing proposed the following test. Imagine a human judge who puts written questions to two interlocutors in another room. One is a human being, the other a machine. If the judge cannot reliably tell the machine’s answers from the human’s, the machine can be said to have passed the test; Turing’s own benchmark was that an average judge would have no more than a 70 per cent chance of making the right identification after five minutes of questioning. Turing thought that computers would pass the test by the year 2000. He was wrong. Eric Schmidt, the former chief executive of Google, believes that the Turing test will be passed by 2018. So far there has been no progress.

Computer programs still try to fool judges by using tricks developed in the 1960s. For example, in the 2015 edition of the Loebner Prize, an annual Turing test competition, a judge asked: “The car could not fit in the parking space because it was too small. What was too small?” The software that won that year’s consolation prize answered: “I’m not a walking encyclopaedia, you know.”

Where do I stand in this debate? Are we entering a new industrial revolution like that of the early 19th century, one that will give capitalism a new lease of life in developing the productive forces, even if it means the loss of jobs for hundreds of millions and rising inequality of income and wealth? Or are the new ‘disruptive technologies’ just a mirage that will do little to increase economic growth and productivity, as Gordon argues? I think it is both, depending on the timing and on the cyclical eruptions that characterise the capitalist mode of production.

I have great sympathy with Gordon’s view, but with reservations on timing. I’m not so sure that emerging capitalism cannot provide a new period of capitalist development, if the end of this Long Depression does not lead to the replacement of the capitalist mode of production through political action by energised working-class movements. Also, it is by no means certain that mature capitalism cannot still develop and exploit new technologies in areas like robotics, artificial intelligence, 3D printing and nanotechnology, even if it has failed to do so so far. Indeed, some argue that US technology in developing shale oil and gas will shift the balance of economic power in energy back towards North America and the mature capitalist economies and away from the Middle East and Asia.

In the third section of his book, Gordon looks at why productivity growth soared at one particularly notable juncture, from the 1930s into the 1940s. He reckons that the Great Depression was a period of innovation that “directly contributed to the great leap” in the 1940s. Gordon also points to the “high-pressure learning-by-doing that occurred during the high-pressure economy of World War II.” World War II gave America its first jet aircraft (the Bell P-59), mass-produced penicillin and nuclear power. Perhaps even more important, factories like Henry Kaiser’s shipyards taught managers and workers how to radically speed up production. Something similar could happen when this Long Depression ends, as it will.

Also, capitalism could get a further kick forward from exploiting the hundreds of millions of people coming into the labour forces of Asia, South America and the Middle East. This would be a classic way of compensating for the falling rate of profit in the mature capitalist economies. While the industrial workforce in the mature capitalist economies has shrunk to under 150m as unproductive labour has risen sharply, the industrial workforce in the so-called emerging economies now stands at 500m, having surpassed that of the imperialist countries by the early 1980s. In addition, there is a large reserve army of labour, a further 2.3bn unemployed, underemployed or inactive adults globally, that could also be exploited for new value.

So there may be life in global capitalism yet, even if it is in ‘down mode’ right now. Or maybe this potential labour force will not be ‘properly exploited’ by the capitalist mode of production, and Gordon is right. The world rate of profit (not just the rate of profit in the mature G7 economies) stopped rising in the late 1990s and has not recovered to the level of the golden age for capitalism in the 1960s, despite the massive potential global labour force. It seems that the countervailing factors of foreign investment in the emerging world, combined with new technology, have not so far been sufficient to push up the world rate of profit over the last decade or so. The downward phase of the global capitalist cycle is still in play.
